PROJECT MediGesture

CLIENTS Medi

YEAR 2020

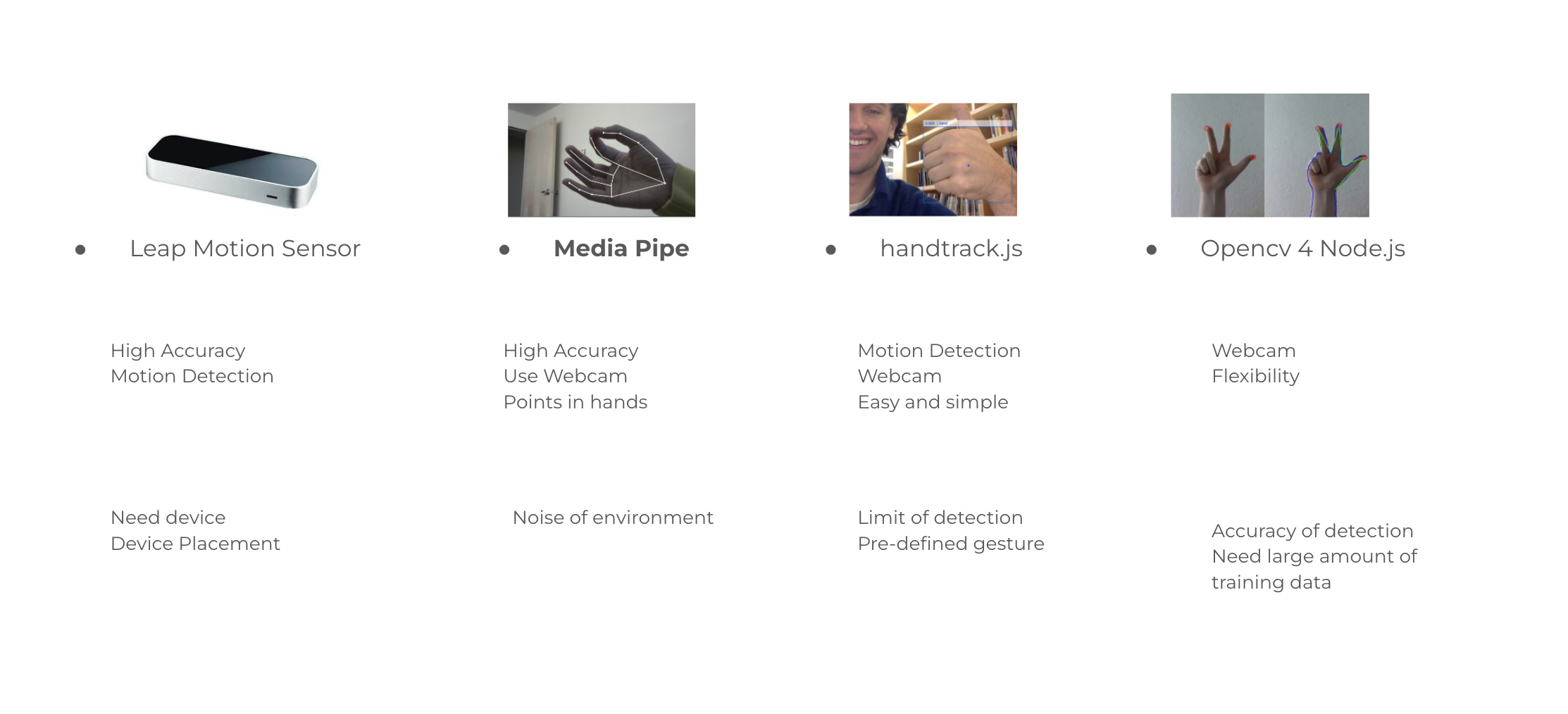

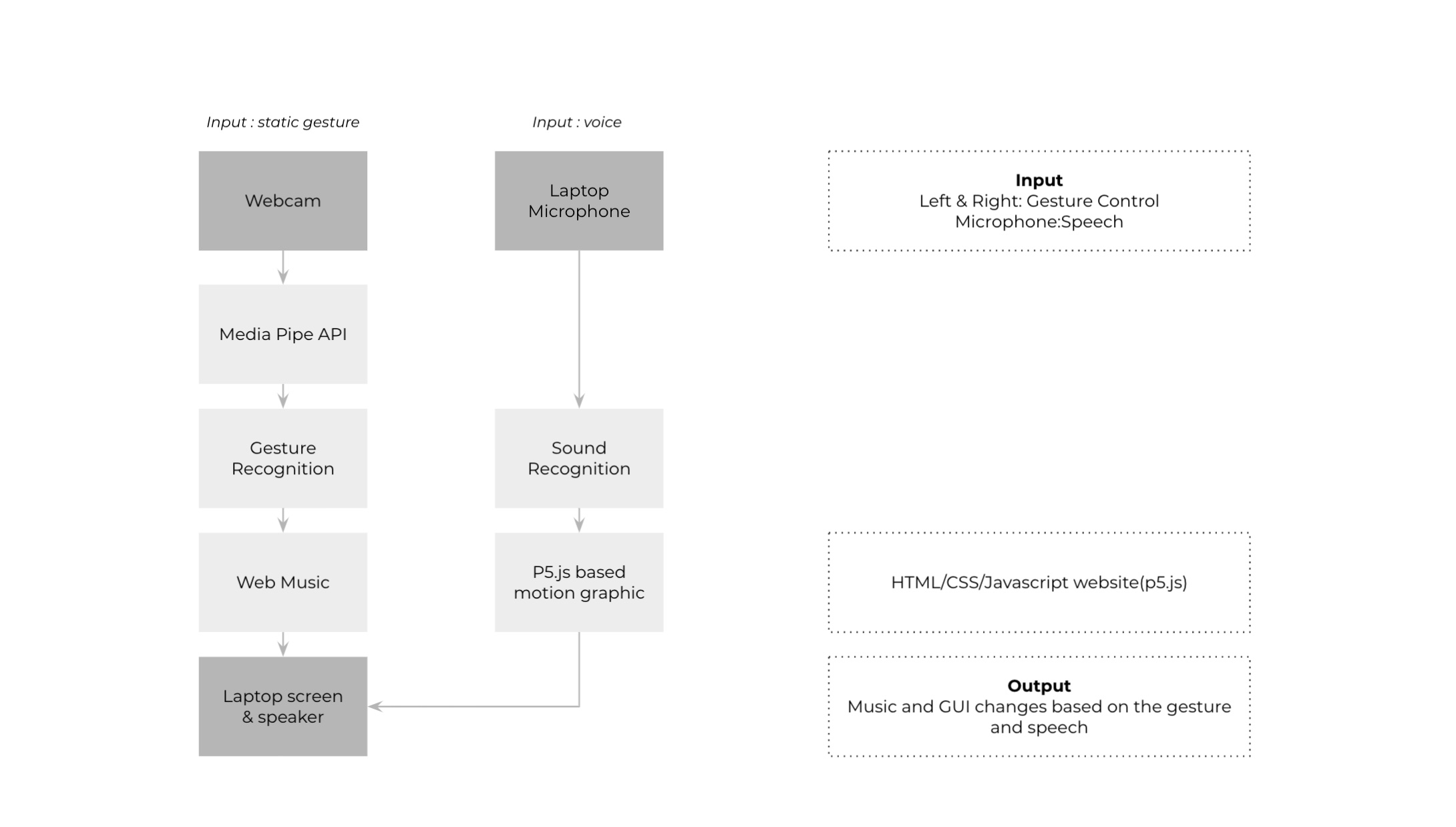

ROLE Concept Design, gesture detection (MediaPipe.js), sound detection, interface (HTML/CSS/JavaScript)

COLLABORATOR Kate

GitHub Link

PAPER Immersive Multimodel Meditation

LINK MediGesture Prototype

CHALLENGE

Create an immersive, multimodal interaction experience for the brand Medi, a startup focused on enhancing people’s meditation experience.

POTENTIAL

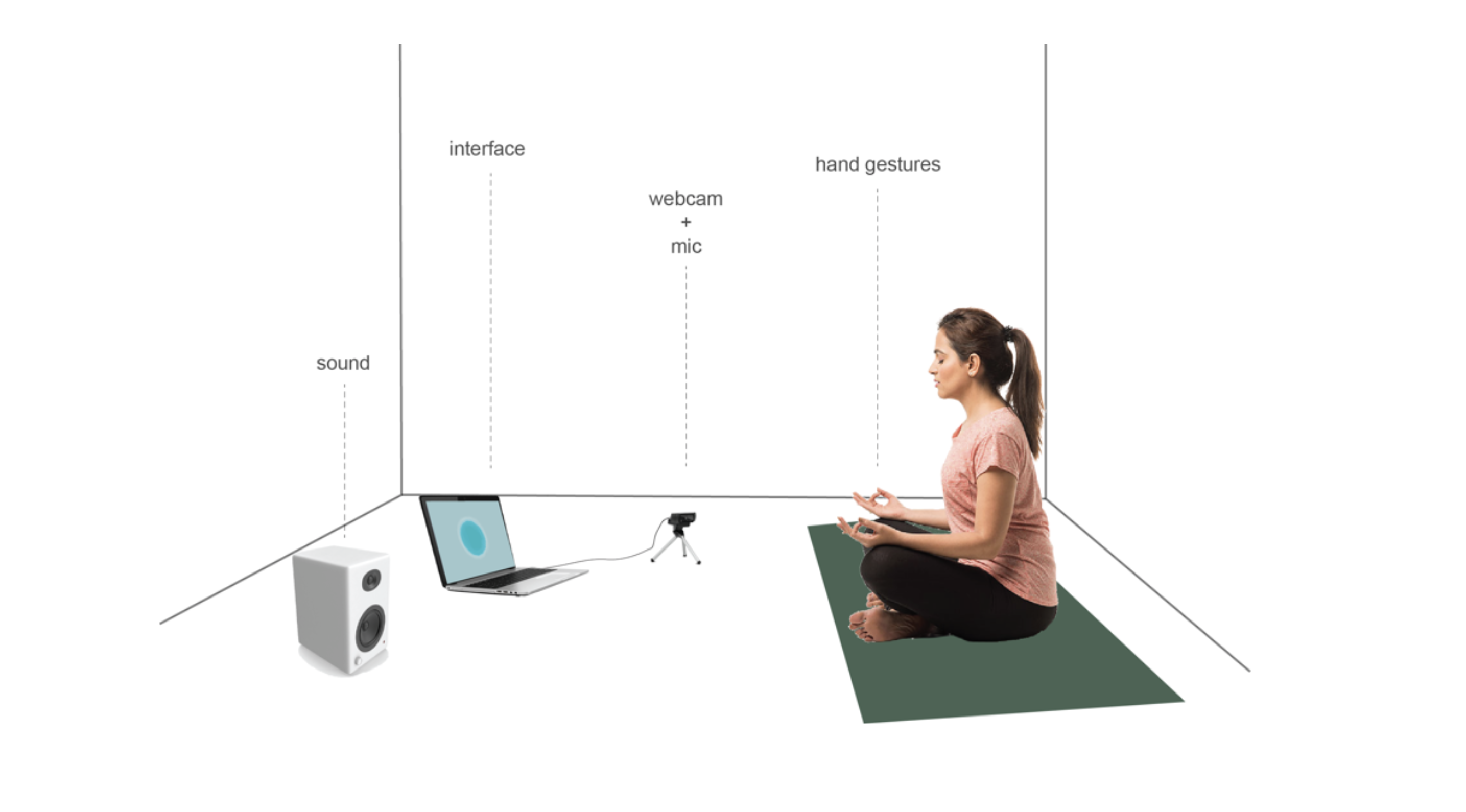

A multimodal voice and hand-gesture control experience that embeds computer vision and machine learning in the meditation process.

How might we make the meditation experience more customized and personalized?

MediGesture is a multimodal application that lets users control the meditation process with voice and gesture and customize their own experience. It is also an educational tool that introduces mudras to anyone who wants a more authentic and enjoyable meditation practice. The interface is built with p5.js, and voice control is driven by p5.speech.js, so users can switch between meditation modes using natural language. Webcam-based hand detection recognizes the mudras users pose and triggers customized chime sounds, giving each user a personalized meditation experience.
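As a rough illustration of the voice-control step, the sketch below maps a recognized phrase to a meditation mode by keyword. The mode names, the keywords, and the `modeFromTranscript` helper are assumptions made for this example, not the project's actual vocabulary or code.

```javascript
// Hypothetical modes and trigger words; the real project may use different ones.
const MODE_KEYWORDS = {
  breathing: ["breath", "breathe", "breathing"],
  mudra: ["mudra", "gesture", "hand"],
  silent: ["silent", "silence", "quiet"],
};

// Return the first mode whose keyword appears as a whole word in the
// transcript, or null when nothing matches.
function modeFromTranscript(transcript) {
  const words = String(transcript)
    .toLowerCase()
    .split(/[^a-z]+/)
    .filter(Boolean);
  for (const [mode, keywords] of Object.entries(MODE_KEYWORDS)) {
    if (keywords.some((keyword) => words.includes(keyword))) {
      return mode;
    }
  }
  return null;
}
```

In a p5.speech sketch, a function like this could be called with the recognizer's `resultString` each time a phrase is recognized, and the returned mode used to switch the interface.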

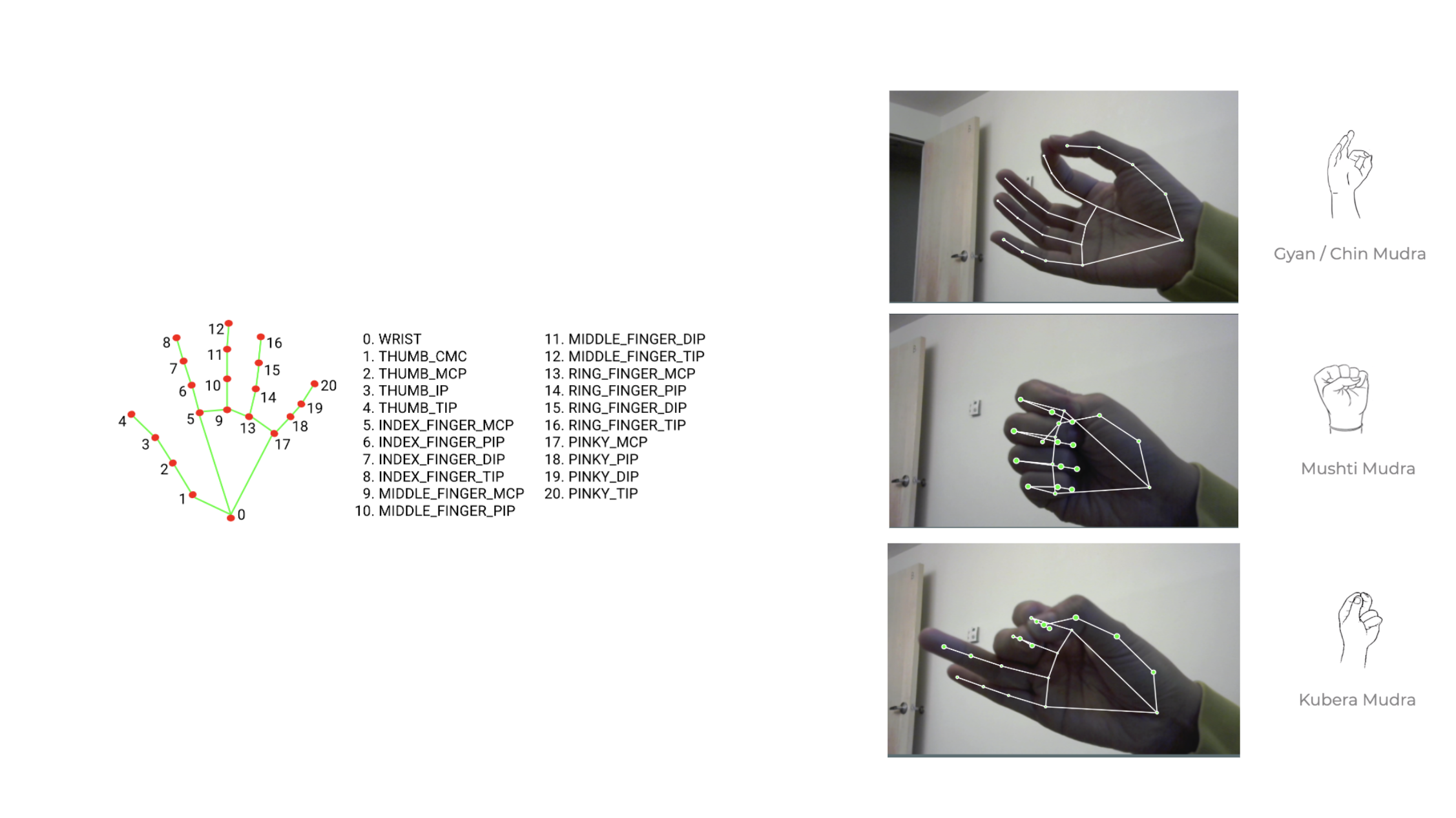

Mudra as an immersive approach

Mudra is a Sanskrit word for a symbolic hand gesture that is said to have the power to produce joy and happiness in the practice of yoga and meditation. This gesture system has been an integral part of many Hindu and Buddhist rituals, and there are more than 399 mudras across various disciplines. Every mudra has a meaning. For example, a gesture in which the participant gently joins the tips of the thumb and the index finger, while the other three fingers are stretched out freely and slightly bent, signifies wisdom. In this project, I will select 6-9 key gestures from the list of mudras and map them to music and graphics. When users change the mudra, the music and graphics will change based on the meaning of the gesture. Users' emotional changes will also be documented to give insight into these shifts.
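One way the wisdom gesture described above could be recognized is sketched below, assuming hand landmarks in the 21-point layout used by MediaPipe Hands (wrist at index 0, thumb tip at 4, index fingertip at 8). The `isThumbIndexMudra` function and its `touchRatio` threshold are hypothetical and untuned; they are not taken from the project's code.

```javascript
// Landmark indices follow the MediaPipe Hands 21-point hand model.
const WRIST = 0;
const THUMB_TIP = 4;
const INDEX_TIP = 8;
const MIDDLE_MCP = 9;
const OTHER_FINGERS = [
  { pip: 10, tip: 12 }, // middle finger
  { pip: 14, tip: 16 }, // ring finger
  { pip: 18, tip: 20 }, // pinky
];

// Euclidean distance between two landmarks; a missing z is treated as 0.
function dist(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, (a.z || 0) - (b.z || 0));
}

// True when the thumb and index tips touch and the other three fingers
// are stretched out. touchRatio is an assumed threshold, expressed
// relative to palm size so the check does not depend on camera distance.
function isThumbIndexMudra(landmarks, touchRatio = 0.25) {
  if (!Array.isArray(landmarks) || landmarks.length !== 21) return false;
  const palm = dist(landmarks[WRIST], landmarks[MIDDLE_MCP]);
  if (palm === 0) return false;
  const tipsTouch =
    dist(landmarks[THUMB_TIP], landmarks[INDEX_TIP]) / palm < touchRatio;
  // A finger counts as extended when its tip is farther from the wrist
  // than its middle (PIP) joint, which tolerates a slight bend.
  const othersExtended = OTHER_FINGERS.every(
    ({ pip, tip }) =>
      dist(landmarks[WRIST], landmarks[tip]) >
      dist(landmarks[WRIST], landmarks[pip])
  );
  return tipsTouch && othersExtended;
}
```

Comparing distances against palm size rather than fixed pixel values is a design choice for this sketch; a production detector would likely need per-user tuning or a trained classifier.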

Interactive Multimodal System

Through observing our own daily meditation and that of close friends, we saw that meditators use different hand gestures as they move into different phases of meditation. These gestures, known as “mudras”, carry the symbolic meaning of feelings in the meditation process. Considering the meanings of the different hand mudras and the potential of combining them with multimodal interaction, we started to imagine a more adjustable meditation assistant that can improve the personal meditation experience through meaningful gesture and voice control.

Immersive Meditation Experience

The outcome is a multimodal application that lets users control the meditation process with voice and gesture and customize their own experience. It is also an educational tool that introduces mudras to anyone who wants a more authentic and enjoyable meditation practice. The interface is built with p5.js, and voice control is driven by p5.speech.js, so users can switch between meditation modes using natural language. Webcam-based hand detection recognizes the mudras users pose and triggers customized chime sounds, giving each user a personalized meditation experience.
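Per-frame hand detection tends to flicker, so a chime would likely need to fire only once a newly posed mudra has been held for a few frames. A minimal sketch of that idea follows; `createMudraTrigger`, the `stableFrames` default, and the mudra labels are illustrative assumptions rather than the project's implementation.

```javascript
// Returns an update function to call once per video frame with the
// detected mudra label (or null when no mudra is recognized).
// onChange fires once each time a different mudra has been held for
// stableFrames consecutive frames.
function createMudraTrigger(onChange, stableFrames = 5) {
  let current = null;   // mudra currently in effect
  let candidate = null; // label seen in the most recent frames
  let count = 0;        // consecutive frames showing the candidate

  return function update(label) {
    if (label === candidate) {
      count += 1;
    } else {
      candidate = label;
      count = 1;
    }
    if (count >= stableFrames && candidate !== current) {
      current = candidate;
      if (current !== null) onChange(current);
    }
    return current;
  };
}
```

The `onChange` callback is where a sketch could start the chime assigned to the new mudra, for example by playing the matching audio file.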
